GSoC'23 Chronicles: Coding Week Five

Hi guys!!

Welcome to my latest blog post! Today, I'm excited to share the progress I made during the fifth week of the Google Summer of Code (GSoC) program. I apologize for the slight delay in posting this update, which covers last week's work. I'll share the highlights of the current week promptly at the beginning of next week. Thank you for your support and for following my GSoC journey each week.

In my previous blog post covering coding weeks 3 and 4, I outlined my primary objective of developing a baseline model for the initial dataset. I also hinted at the challenge of working with a larger and more complex dataset: the breast cancer dataset from cBioPortal. I'm happy to report that I have completed both milestones. Now, let's dive into the developments of the past week.

My Progress This Week

My primary focus during this phase was to preprocess the breast cancer dataset, ensuring compatibility with PyTorch Geometric. Since I had already gone through this process with the acc_data, adapting it to the new dataset was relatively straightforward.

Because this dataset is larger (1082 instances), I made one addition to the pipeline. I split it into training, validation, and test sets in a 60:20:20 ratio. This keeps separate held-out data for tuning the model and for its final evaluation.
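As an illustration, a seeded 60:20:20 split can be done on indices in plain Python. This is a minimal sketch under my own assumptions. The helper name split_indices, the seed, and giving rounding leftovers to the test set are illustrative choices, not necessarily what the project code does.

```python
import random


def split_indices(n, ratios=(0.6, 0.2, 0.2), seed=42):
    """Shuffle indices 0..n-1 and cut them into train/val/test index lists.

    Any rounding remainder goes to the test set, so every index is used exactly once.
    """
    if len(ratios) != 3 or abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("expected three ratios that sum to 1")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded, so the split is reproducible
    n_train = int(n * ratios[0])
    n_val = int(n * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


train_idx, val_idx, test_idx = split_indices(1082)
print(len(train_idx), len(val_idx), len(test_idx))  # 649 216 217
```

PyTorch Geometric datasets can be indexed with such a list of indices (for example dataset[train_idx]) to obtain each subset.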

With this split in place, I trained the Graph Neural Network (GNN) model, which reached a final mean squared error (MSE) of 705. Because of the memory limits of my Google Colab GPU, I had to use a batch size of 16. This gave 55 training batches and 10 test batches. Larger batch sizes ran out of GPU memory and interrupted the run.
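The batch counts follow from the subset sizes. PyTorch Geometric's DataLoader builds on torch.utils.data.DataLoader, which by default (drop_last=False) yields ceil(n / batch_size) batches per epoch, with the last one possibly smaller.

The small helper below is hypothetical and dependency-free, meant only for checking the arithmetic. It makes the trade-off explicit: halving the batch size halves the number of graphs held on the GPU per step, but roughly doubles the number of steps per epoch.

```python
import math


def num_batches(num_samples, batch_size, drop_last=False):
    """Batches per epoch for a PyTorch-style DataLoader over num_samples items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    if drop_last:
        return num_samples // batch_size  # the final partial batch is discarded
    return math.ceil(num_samples / batch_size)  # the final partial batch is kept


# Illustrative subset of 100 graphs (hypothetical size): smaller batches, more steps.
for bs in (16, 32, 64):
    print(bs, num_batches(100, bs))  # 16 7, then 32 4, then 64 2
```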

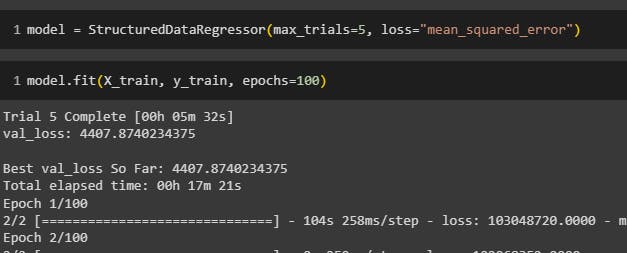

For the baseline model on the acc_dataset, I used the AutoKeras library. I ran 5 trials of 100 epochs each to explore different model configurations and identify the best-performing one. This is shown below:

Applying deep neural networks (DNNs) to the graph-based breast cancer dataset gave an MSE of approximately 4000, far higher than the GNN's 705. This was expected. A standard DNN works on flat feature vectors and does not use the connections between nodes, so it is probably not the best fit for graph data. To address this, I plan to explore other AutoML models with a larger max_trials.

To summarize the progress made thus far:

Breast Cancer Dataset Preprocessing: I applied the same preprocessing steps used for the initial dataset, added a validation set, and reduced the batch size to 16 to fit within the Colab GPU's memory. The trained GNN model reached an MSE of 705.

Baseline Modeling with AutoKeras: I applied AutoKeras, an automated machine learning (AutoML) library, to the acc_dataset that I preprocessed during the first weeks of the project. The current setup runs five trials of 100 epochs each.

Challenges Encountered

This week, my main challenge came during the GNN modelling phase. I initially ran into a series of errors that stalled my progress. It then became clear that my model was not using the GPU, which made it run much more slowly. Fortunately, a helpful friend guided me through resolving the issue.

With their guidance, I reconfigured the code to run on the Google Colab GPU, and this change also eliminated the errors I had been seeing. Overcoming this challenge was a valuable lesson in configuring GPU usage properly when training models.
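The post doesn't show the exact change, so the following is only a generic sketch of the standard PyTorch pattern, not necessarily the fix applied in the project. The pattern has three steps:

1. Pick a device once.
2. Move the model to it before creating the optimizer.
3. Move every batch to the same device.

Mixing CPU and GPU tensors typically fails with a RuntimeError about tensors being on different devices. A plain nn.Linear model and random tensors stand in for the GNN and the graph batches. PyTorch Geometric Data and Batch objects are moved the same way, with .to(device).

```python
import torch
from torch import nn

# Use the GPU when one is available (in Colab: Runtime > Change runtime type), else the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-in model: move its parameters to the device before building the optimizer.
model = nn.Linear(8, 1).to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.MSELoss()

# Stand-in batch; a PyTorch Geometric Data/Batch object is moved the same way: batch.to(device)
x = torch.randn(16, 8).to(device)
y = torch.randn(16, 1).to(device)

model.train()
optimizer.zero_grad()
loss = loss_fn(model(x), y)  # model and inputs are now on the same device
loss.backward()
optimizer.step()
print(f"device={device}, loss={loss.item():.4f}")
```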

My Plans for Next Week

For next week, I have two main objectives:

InMemoryDataset Class: I plan to wrap the ACC dataset in PyTorch Geometric's InMemoryDataset class. This ensures the dataset follows PyTorch Geometric's standard dataset interface, so it integrates cleanly with the library's data loaders and processing pipeline.

Creation of Detailed Notebooks: To help others who want to work with these datasets, I will write notebooks that walk through the preprocessing steps for each dataset, with clear instructions and explanations of the techniques used. These should make it easy for others to reproduce and build on my work.

I look forward to sharing my progress on these milestones in my upcoming post for coding week 6. Stay tuned!

I warmly invite you to join me next week as I continue to share the latest updates and insights. Until then, thank you for reading to the end!

(P.S. If you'd like to learn more about my project, check it out on GitHub: https://github.com/cannin/gsoc_2023_pytorch_pathway_commons)